Many mornings, the first thing I do is drink coffee out of my FTC mug. I worked at the FTC for three years. There, I helped the smartest career civil servants and political appointees I have ever met fight for the American people. They inspired me to see the law as a force for good and convinced me to go to law school. These days especially, that mug reminds me that good public servants can do good things in this government. The logo on that mug, the FTC’s winged flywheel, represents the role the FTC plays in ensuring fairness in the American economy. No longer.

On Wednesday, that logo graced the top of a letter announcing an investigation into Media Matters for America. That investigation can only fairly be considered a politically motivated threat against an advocacy group opposed to the current administration. The investigation appears to concern Media Matters’ alleged collusion with advertisers to deprive Elon Musk’s Twitter (renamed by Musk “X”) of ad revenue. It appears to have been prompted by Musk’s own lawsuit on the issue, which is itself a blatant attempt to interfere with Media Matters’ First Amendment rights.

Needless to say, the investigation is without merit. Media Matters’ efforts to stop hate speech on Musk’s platform are in no way the type of horizontal collusion cognizable under the antitrust laws. Indeed, Musk’s original suit neither mentions collusion nor alleges any antitrust violation. (The suit instead claims that Media Matters interfered with Twitter’s contracts with advertisers and disparaged the quality of the platform.) The legality of such an attempt to coordinate a political boycott was settled long ago in NAACP v. Claiborne Hardware, a case concerning a boycott of racist businesses during the Civil Rights Era. Even the 5th Circuit has acknowledged the danger of this suit, specifically the potential for coercion or intimidation posed by Musk’s discovery efforts to obtain the names of Media Matters’ donors. Under the first Trump administration, the Justice Department pursued a similar theory of coordination among carmakers that had reached an accord with California over emission standards, a clear act of political lobbying exempted from antitrust scrutiny under the Noerr-Pennington doctrine. (The case was smartly withdrawn in the early days of the Biden administration.) Despite this background and clear case law, the FTC has decided to follow Musk’s vendetta and use its limited resources to pursue a claim with little to no chance of success.

Having worked at the agency recently, I am often asked by law school professors and classmates what I think will happen to antitrust enforcement at the FTC during the second Trump administration. I have usually said that I expect not much to change. The agency’s Republican leaders generally support strong enforcement; for example, Mark Meador is on the record as supportive of the Robinson-Patman Act (RPA), a law hated by big business. (To my disappointment, Meador voted to withdraw the FTC’s pending, meritorious, and well-researched RPA claim against PepsiCo.) In many areas of federal law enforcement, the presumption of regularity has already broken down for this administration. I hoped, though perhaps did not expect, that the FTC would be different: that an agency with a history of independence would remain so. That is, after all, what happened during the first Trump administration.

I was wrong. Wednesday’s actions, likely directed by Chair Ferguson and his staff, indicate that the Chair of the FTC plans to use his agency as a weapon in Trump’s antidemocratic arsenal. Ferguson will, presumably in pursuit of Trump’s favor and Musk’s political war chest, sacrifice the goodwill the agency has built up with the judiciary and the public over the decades. I fear that after this administration is over, the agency where I started my career will be a shadow of what it once was, depleted of its credibility, quality staff, and legitimacy. The Wall Street Journal editorial page lambasted Lina Khan for “politicizing” antitrust by trying to dismantle concentrations of economic power, even though dismantling such concentrations was the original purpose of the FTC Act. Yet Khan’s FTC never once used its power to harass a political enemy. But that’s precisely what Ferguson’s FTC is doing.

The anti-monopoly community cannot keep behaving as if it is business as usual at the FTC and DOJ. We cannot continue to wall off anti-monopoly policy from the rest of the Trump administration’s authoritarian actions. Under this administration, there is only one policy issue: democracy protection. We should do everything in our power to make sure that agencies like the FTC are not wielded as weapons of authoritarianism. If that means sacrificing the credibility of the agency I love, so be it.

Bryce Tuttle is a student at Stanford Law School. He previously worked in the office of FTC Commissioner Bedoya and in the Bureau of Competition.

Back in 2011, ESPN and the NCAA agreed to a $34-million-per-year media deal that gave ESPN the right to broadcast championships in 29 different college sports. The list included every single college sport played by women. As time went by, it became increasingly clear that this deal dramatically undervalued the rights to women’s sports. In 2024 dollars, the 2011 deal would be worth only $47.6 million per year, or just $1.64 million per sport (equal to $47.6 million divided by 29 sports). A report commissioned by the NCAA itself in 2021 argued that the media rights to women’s college basketball alone were worth between $81 million and $112 million.

In 2023, when the 2011 media rights deal was finally expiring, the NCAA had an opportunity to collect far more money. And in January of 2024, the NCAA proudly announced that this mission had been accomplished! NCAA President Charlie Baker told the Associated Press: “Yes, it’s a bundle, but it’s a bigger bundle that will be much better.”

Yes, that’s the quote. And yes, the NCAA agreed to a bigger and better bundle that is going to be much better!! Problem solved!

Not exactly.

When we delve into the numbers, we do see that the agreement is technically bigger. Previously, the NCAA had a 14-year deal that paid $34 million per year, or $476 million across the entire agreement. The new deal is worth $920 million over eight years, or $115 million per year. Yet the new deal also covers 40 sports (up from 29 previously). So, it might look like the NCAA is now getting $2.875 million per sport (equal to $115 million divided by 40 sports). Or, as Baker might put it, bigger and better!
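The headline figures in the paragraph above can be checked with a few lines of Python. This is only a back-of-the-envelope sketch: it uses the nominal dollar amounts quoted in this post and ignores inflation, discounting, and how value is actually distributed across sports.

```python
# Back-of-the-envelope check of the NCAA/ESPN deal figures quoted above.
# All amounts are in millions of nominal dollars, as reported in the text.

OLD_ANNUAL = 34.0   # 2011 deal: $34M per year
OLD_YEARS = 14      # over 14 years
OLD_SPORTS = 29     # covering 29 championships

NEW_TOTAL = 920.0   # 2024 deal: $920M in total
NEW_YEARS = 8       # over 8 years
NEW_SPORTS = 40     # covering 40 championships

old_total = OLD_ANNUAL * OLD_YEARS       # whole-contract value of the old deal
new_annual = NEW_TOTAL / NEW_YEARS       # average annual value of the new deal
new_per_sport = new_annual / NEW_SPORTS  # naive average per championship

print(f"Old deal total:        ${old_total:.0f}M")
print(f"New deal per year:     ${new_annual:.0f}M")
print(f"New deal per sport/yr: ${new_per_sport:.3f}M")
```

The naive per-sport average treats every championship as equally valuable, which is exactly the assumption the next paragraphs take apart.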

But the math doesn’t quite work as Baker’s quote suggests. Remember, the report given to the NCAA in 2021 said that women’s college basketball is worth between $81 and $112 million. The NCAA and ESPN ultimately didn’t agree with that value. Baker and the NCAA did hire a media consultant (Endeavor’s IMG and WME Sports) that “estimated about 57% of the value of the deal — or $65 million annually — is tied to the women’s March Madness tournament.”

That isn’t quite $81 million per year. But the people at Endeavor said they were pretty sure that the 2021 report overestimated the value of women’s college basketball. If we take Endeavor at its word (it didn’t show its math!), we learn something odd about all the other sports played by women. Remember, back in 2011, the NCAA sold the media rights to 29 sports for $34 million per year. Once again, in 2024 dollars, that worked out to $1.64 million per sport. If we believe Endeavor, then the rights to women’s college basketball sold for $65 million and the rights to the 39 sports that are not women’s college basketball sold for $50 million ($115 million less $65 million). That means all the other sports were valued at $1.28 million each in 2024 (equal to $50 million divided by 39).
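The residual calculation in the paragraph above can be sketched the same way. The sketch assumes, as the paragraph does, that Endeavor’s $65 million figure for women’s basketball is correct and that the remaining value is spread evenly across the other 39 sports; the $47.6 million inflation-adjusted value of the 2011 deal is taken from this post rather than recomputed from a price index.

```python
# Implied per-sport value of the non-basketball championships in the 2024 deal,
# compared with the inflation-adjusted per-sport value of the 2011 deal.
# All amounts in millions of dollars; figures are those quoted in the post.

NEW_ANNUAL = 115.0              # 2024 deal, average per year
WBB_SHARE = 65.0                # Endeavor's estimate for women's March Madness
OTHER_SPORTS = 40 - 1           # every championship except women's basketball

OLD_ANNUAL_2024_DOLLARS = 47.6  # 2011 deal expressed in 2024 dollars (from the post)
OLD_SPORTS = 29

wbb_fraction = WBB_SHARE / NEW_ANNUAL       # Endeavor's "about 57%"
residual = NEW_ANNUAL - WBB_SHARE           # value left for everything else
new_per_other_sport = residual / OTHER_SPORTS
old_per_sport = OLD_ANNUAL_2024_DOLLARS / OLD_SPORTS

print(f"Women's basketball share of deal: {wbb_fraction:.1%}")
print(f"Left for the other {OTHER_SPORTS} sports:  ${residual:.0f}M")
print(f"Per other sport, 2024 deal:       ${new_per_other_sport:.2f}M")
print(f"Per sport, 2011 deal (2024 $):    ${old_per_sport:.2f}M")
```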

And that means, according to the NCAA and its media consultants, the value of women’s volleyball, women’s gymnastics, and softball all went down from 2011 to 2024!

One has to wonder how that could be possible. After all…

- The NCAA Women’s College World Series Finals in 2024 attracted 2 million viewers. This was up 3% from the previous ratings record set in 2015.

- The 2023 NCAA championship match in women’s volleyball attracted a record-setting 1.69 million viewers.

- In 2022, the NCAA women’s gymnastics final was bumped to an earlier start to accommodate an NHL broadcast. The women’s final attracted 922,000 viewers, a mark that was 15% higher than the NHL broadcast in the more favorable time slot.

To put all these numbers in perspective, the NHL averaged about 500,000 viewers per regular season game in 2023-24. And the Stanley Cup playoffs in 2024 averaged 1.8 million viewers per game. For these ratings, Disney (parent company of ESPN) and Turner (parent company of TNT) agreed to pay $625 million per year to the NHL.

Remember, ESPN got all of women’s college sports—and much of men’s college sports (except for football and men’s basketball)—for just $115 million per year. How could the NHL package be worth five times what we see for women’s college sports? And how could the rights to men’s college basketball be worth $1.1 billion per year, while the rights to women’s college basketball are only valued at $65 million? After all, the women’s basketball final in 2024 actually attracted nearly four million more viewers than the men’s final.
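The ratios behind these two questions follow directly from the annual figures quoted in this post (NHL $625 million, the NCAA/ESPN package $115 million, men’s college basketball $1.1 billion, and Endeavor’s $65 million estimate for women’s college basketball). This is a rough sketch using headline annual values only; it does not adjust for contract length, number of games, or what else each package includes.

```python
# Annual rights fees quoted in the post, in millions of dollars.
NHL_ANNUAL = 625.0
NCAA_ESPN_ANNUAL = 115.0
MENS_BB_ANNUAL = 1100.0
WOMENS_BB_ANNUAL = 65.0  # Endeavor's estimate

# How many times larger each package is than its comparison.
nhl_ratio = NHL_ANNUAL / NCAA_ESPN_ANNUAL
bb_ratio = MENS_BB_ANNUAL / WOMENS_BB_ANNUAL

print(f"NHL package vs. NCAA/ESPN package:   {nhl_ratio:.1f}x")
print(f"Men's vs. women's basketball rights: {bb_ratio:.1f}x")
```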

And once again, how did the value of women’s volleyball, women’s gymnastics, and softball actually go down?

All of this suggests that the NCAA left quite a bit of money on the table. For people who have only heard the story about markets told in ECON 101, this must seem impossible. It calls to mind a very old joke told by economists:

Two economists are walking down a street and see a $20 bill lying on the sidewalk. The first economist says, “Look at that $20 bill.” The second says, “That can’t really be a $20 bill lying there, because if it were, someone would have picked it up already.”

This isn’t exactly funny (economists aren’t known for their ability to tell jokes!). But this story does capture a fundamental idea for many economists. Decision-makers tend to be rational, and markets tend to be efficient. Therefore, money is not left on the table (or the sidewalk!).

This view isn’t just prevalent among economists. A story that likely started with economists is now believed by people everywhere. If you tell someone that a business leader made a mistake that cost millions, you will immediately be asked: “How is that possible?”

There is a very simple answer to that question. Human beings don’t always try their hardest, and they make mistakes. And markets, which can at times force people to try harder and correct their mistakes, are often not very efficient.

This is especially true when markets are not competitive. As Adam Smith observed back in 1776: “Monopoly… is a great enemy to good management.”

As economists have known for decades, the NCAA is a monopolistic cartel. One of the many problems with monopolies, as Adam Smith understood, is that the people who lead monopolies don’t have to be good managers.

This appears to be the story of how the NCAA sold the media rights to women’s college sports. At the very end of the article detailing the NCAA’s media rights deal was this sentence: “The deal was also struck within ESPN’s exclusive negotiating window and never brought to the open market.”

And there’s our answer.

Charlie Baker and the NCAA didn’t shop the rights to women’s college sports. Markets can be efficient when there is competition. But if you take away the competition, the power of markets vanishes.

In contrast to the NCAA, the NHL shopped its rights to multiple companies and got multiple offers. Baker and the NCAA didn’t get a very good deal because they only bothered to negotiate with one company (ESPN), leaving out potential bidders such as Turner, Amazon, and Netflix. Yes, the NCAA did get more for women’s college basketball. But it doesn’t look like it got as much as it could have. And one doesn’t have to be a math major to see that the NCAA managed to get less for women’s volleyball, women’s gymnastics, and softball than it was getting before. Apparently, no one at the NCAA took a few moments to break out a calculator and notice.

How is that possible? Once again, monopolies are the enemy of good management. If a small farmer in a competitive market makes a serious mistake, there is a good chance the farmer goes out of business. Competition can be a very harsh teacher.

But Charlie Baker and the NCAA are not small farmers. The NCAA isn’t going to go out of business because it failed to negotiate a very good deal for women’s sports. The NCAA will continue to exist and likely continue to tell us that women’s college sports don’t generate much revenue. Of course, that isn’t true. Women’s sports do, in fact, generate substantial revenue. But right now they are generating it for ESPN. As Lindsey Darvin at Forbes recently reported, by January, advertising for the broadcast of women’s March Madness had already sold out. Advertisers know there are going to be millions of viewers for the women’s college basketball championship, and they are definitely willing to pay ESPN to reach that audience.

But the women in college sports aren’t going to see all that money. Charlie Baker decided to leave it on the table and prove Adam Smith was right!

The shooting of its CEO has flung UnitedHealth Group (“UHG”) into the American zeitgeist, and there’s been no shortage of heated opinions on what to make of it. With the tragedy nearly two months behind us, perhaps we can now reflect, dispassionately, on the real diagnosis here: UHG has been monopolizing and “monopsonizing” American health care. Agreeing with that diagnosis would be Eric Bricker, M.D., who educates extensively about health care finance on his YouTube channel, AHealthcareZ. With its current market cap at nearly $500 billion—close to that of the rest of the top ten health care companies in America combined—Bricker concludes, “UnitedHealth Group essentially is health care in America.”

Indeed, UHG has gone well beyond its roots in health insurance to bill itself now as “a health care and well-being company.” UHG is the Amazon of American health care—like Amazon, it should be viewed as a multi-sided platform in the health care marketplace, where it dominates as operator, participant, and controller of the “pipes” through which much of health care flows. How so? And how to interpret this from an antitrust perspective? Let us count the ways.

UHG: The Operator

Let’s start with UHG’s roots as a health insurance company, UnitedHealthcare (“UHC”). UHC is in effect a financial middleman that operates a transactional network connecting suppliers with purchasers in the health care marketplace. The suppliers are physicians, hospitals, pharmacies, pharmaceutical companies, and the like. In America, the purchasers are largely the government (via Medicare and Medicaid) and employers, who sponsor health insurance for most of those not on Medicare or Medicaid.

As an intermediary, UHC benefits from what economists call “network effects”—the more suppliers and purchasers utilize its network, the more valuable its network becomes. After a series of horizontal mergers with other insurance companies over several decades, UHC now has the largest share (14%) of the highly concentrated commercial health insurance market. Its share is even greater (28%) of the also highly concentrated Medicare Advantage market, the market of private Medicare plans now accounting for over half of the Medicare market overall. UHC makes twice as much in this space as it does in employer-sponsored health insurance. Even in traditional Medicare, UHC dominates as AARP’s exclusive Medicare Supplement plan provider.

But UHC isn’t the only network-effect-exploiting middleman in UHG’s arsenal. Its other main subsidiary is Optum. Optum itself has three business branches: OptumRx, OptumHealth, and OptumInsight. Of the three branches, OptumRx is the cash cow: it is UHG’s pharmacy benefits manager (“PBM”). PBMs have been in the crosshairs of antitrust advocates for years now, and a whole antitrust-related post could be written on this subtopic alone. Suffice it to say here, OptumRx is the third largest of the three PBMs that control 80% of all prescriptions administered in America. And Bricker illustrates well how a PBM like OptumRx sits right in between purchasers and suppliers in prescription drug administration.

The trouble occurs when OptumRx serves two masters: (1) the employer or government, which wants the PBM to negotiate the lowest price possible for a given drug; and (2) the drug manufacturer, which pays the PBM various “fees,” aka kickbacks, for preferred placement on the PBM’s drug formulary, kickbacks that rise as the drug’s price rises. OptumRx also requires patients in its PBM plans to use its own pharmacy for specialty medications, Optum Specialty Pharmacy. As a recent FTC study shows, those specialty medications are a growing profit center for OptumRx, with the markup on some of them exceeding 1,000 percent. Such conflicts of interest are endemic to the other major PBMs as well. When it comes to dealing with the powerful, concentrated PBMs, the conflicts of interest and restricted choices make for awfully poor quality. (Ask any physician who’s spent hours on the phone trying to get prior authorization for the PBM to cover a prescription, and you will get an earful of Kafkaesque misery.)

At any rate, UHG plays multiple sides of its multi-sided platform in other unique ways. In 2017, Optum acquired The Advisory Board Company and is now the third largest health care consulting firm in America. In this capacity, UHG now advises hospitals on how to get paid more, while its affiliate, UHC, negotiates with those very hospitals to get paid less. With its acquisition of Change Healthcare in 2022 (more on this below), UHG brought Change’s InterQual into its fold. InterQual is one of only two companies in America that control utilization management of hospital beds: how many paid “bed days” should be assigned to a hospitalized patient with a given diagnosis before the insurance payment is cut off. Conflict of interest strikes again, in a market that Bricker estimates at $400 billion per year in health care spend. That’s a huge market in which to have such a concentration of economic power.

UHG: The Participant

We’re not done with UHG’s non-horizontal mergers. In the last decade, UHG has gone on a vertical-integration buying spree, specifically to occupy the health care marketplace not just as a platform middleman but also as a participant. As UHG’s participant arm, OptumHealth has entered the home health care space with its acquisition of the nation’s third largest home health provider (and also a large hospice provider), LHC Group, a merger that passed through initial scrutiny by the FTC. And OptumHealth now employs or is affiliated with the largest number of physicians in the country—90,000 and counting, or a tenth of all physicians in America.

UHG argues that its acquisition of physician practices aligns with so-called “value-based care,” whereby a health care entity bears risk through capitated payments from, say, the government as in Medicare Advantage plans; the entity then makes profits based not on volume of care but on quality. But quality improvement may be more rhetoric than reality, as local investigative reports of post-merger problems suggest:

These investigative columns have uncovered the healthcare company’s oppressive physician employment contract; a disastrous phone system; urgent care upheaval; alleged double billing; copay confusion; a scathing internal survey; data privacy breaches; attorney general scrutiny; suspect COVID-19 testing charges; predatory marketing tactics; Medicare Advantage-related profiteering concerns; state lobbying efforts; a disconcerting doctor shortage; the troubling mix of healthcare with insurance services; the unethical banning of unwell patients; and the denial of patient medical records.

That’s a hairy list.

In addition, Bricker presents a “fable” that illustrates the risk of vertical foreclosure. An insurance carrier buys a physician practice that formerly used Vendor A, which charged $300 per patient per day for a particular patient service. After the acquisition, the insurance carrier replaces Vendor A with Vendor I, which the carrier owns and which charges $800 per patient per day. Not only that, the insurance carrier and the physician practice had agreed on an earnout in which the practice would earn payments based on its future profits post-merger. By forcing the practice to use the more expensive Vendor I, the carrier decreases practice profits and therefore the earnout. Double win for the insurance carrier. Double loss for the physicians and for the employers and other insurance carriers billed for the health care costs, as those costs rise. Hmm…is this fable the real story of UHG?

Texas and many other states forbid the corporate practice of medicine. Yet UHG’s quiet but aggressive gobbling up of physician practices skirts the prohibition. And while the OGs of the practices do well in the sellout, the rest may just have to deal with decreased earnouts, pay cuts, increased patient loads, layoffs, and onerous noncompetes: in short, to use Cory Doctorow’s word, the “enshittification” of health care. No wonder physicians are burning out in droves, as these vertical integrations curtail their power.

The curtailing of physician power turns into a classic case of monopsony power. At least one health care organization has filed a lawsuit against UHG in California, alleging that, among other things, UHG’s control of the local primary care physician market unlawfully restricted physicians from working for competing networks and taking their patients with them. And as UHG’s monopsony power (along with that of the other big carriers) to push take-it-or-leave-it insurance contracts with independent physicians has grown, many of those otherwise independent physicians have banded together to set up “management service organizations,” in an attempt to increase countervailing power and negotiate better contracts. It’s an arms race to determine who will get a bigger share of the health care pie. The net effect? Increasing prices and decreasing quality for those employers and their workers who seek health care.

UHG: The Pipes

UHG increasingly controls not just the operation and participants of American health care, but also its transmission lines. In 2022, UHG made a bid to acquire Change Healthcare, a company that electronically processed billing claims and remittances between myriad health insurance carriers and the vast majority of hospitals and doctors in America. Change also ran a quarter of another pipe in health care: the “switch” software connecting pharmacies with plan information from all the PBMs, as well as processing the coupons pharmaceutical companies can issue directly to the patient for prescriptions filled at the pharmacy. Around the time of the proposed acquisition, Change had only one percent of the revenue of already gargantuan UHG. What Change had, nevertheless, was the valuable data in all those billing claims and remittances: patient IDs, provider IDs, diagnosis codes, procedure codes, and billed and allowed amounts—for ALL carriers, no less. That data could give UHG an advantage, for example, in quoting lower prices on commercial plans for fully insured employers with healthier employees, targeting lower-risk Medicare Advantage pools, or carving out a few expensive outlier physicians from the insurance network.

The DOJ tried to block the UHG-Change merger but failed. In its defense, UHG pointed to longstanding strict firewalls between Optum’s data analytics and UHC’s insurance underwriting that prevented access to and use of sensitive claims information from competitor carriers. That, and the divestiture of one of Change’s claims edit products, a horizontal competitor to Optum, were enough to convince the district court to approve the merger.

But not all has been well. The February 2024 ransomware attack against Change left thousands of medical practices, hospitals, and pharmacies without incoming cash flow once claims processing shut down. At least one large clinic in Oregon, already in talks to merge with UHG, had to apply for and ultimately get emergency approval for its buyout after running out of cash. How convenient for UHG: as one headline aptly put it, “UnitedHealth Exploits an ‘Emergency’ It Created.”

In any case, will UHG’s so-called firewalls hold up over time? Are the pipes of the health care infrastructure UHG now controls “essential facilities” that should invoke that discarded stepchild of antitrust doctrine? At the very least, UHG has foreclosed any defense that there can be no intra-enterprise conspiracy here. As one researcher lauded, the secret to UHG’s power is that it has set up Optum as a fully autonomous, separate business with its own processes, resources, and profit streams, distinct from the insurance business. That sounds like a disunity of economic interest—which means any collusion, express or tacit, between the Optum and UHC subsidiaries of UHG would implicate Section 1 of the Sherman Act.

Where Do We Go From Here?

The DOJ did not appeal the district court’s judgment on the UHG-Change merger. But it appears the DOJ wasn’t done with UHG. In October 2023, the DOJ reopened an antitrust investigation into UHG’s business practices. And in November 2024, the DOJ along with Maryland, Illinois, New Jersey, and New York sued under a horizontal merger theory to block UHG’s proposed acquisition of Amedisys, the country’s largest home health and hospice provider. It remains to be seen what the antitrust stances of the DOJ and FTC will now be with the upcoming change in administration.

Whatever that change will bring, UHG is the Amazon warrior of the health care marketplace in America. As health care’s ever-expanding operator, participant, and pipes, UHG reigns supreme over the exploding Medicare Advantage market. As UHG and the other big carriers continue to siphon Medicare Advantage volume away from traditional participants like hospitals, Bricker predicts those hospitals will have their go-to response: demand higher unit prices from the carriers on the commercial side. Who will subsidize those higher prices? The American employer and worker. And who gets hurt the most from the concentration of economic power in health care? Patients who can least afford it.

Sadly, all the charged rhetoric surrounding the UHG CEO shooting has distracted attention away from the real diagnosis here. What ails the American health care system is structural. It has everything to do with antitrust. And the American health care system is increasingly the UnitedHealth Group system.

With the cultural shift toward populism—whether conservative or progressive in bent—let’s hope that we can unite together and make our health care system less United.

Venu Julapalli is a practicing gastroenterologist and recent graduate of the University of Houston Law Center.

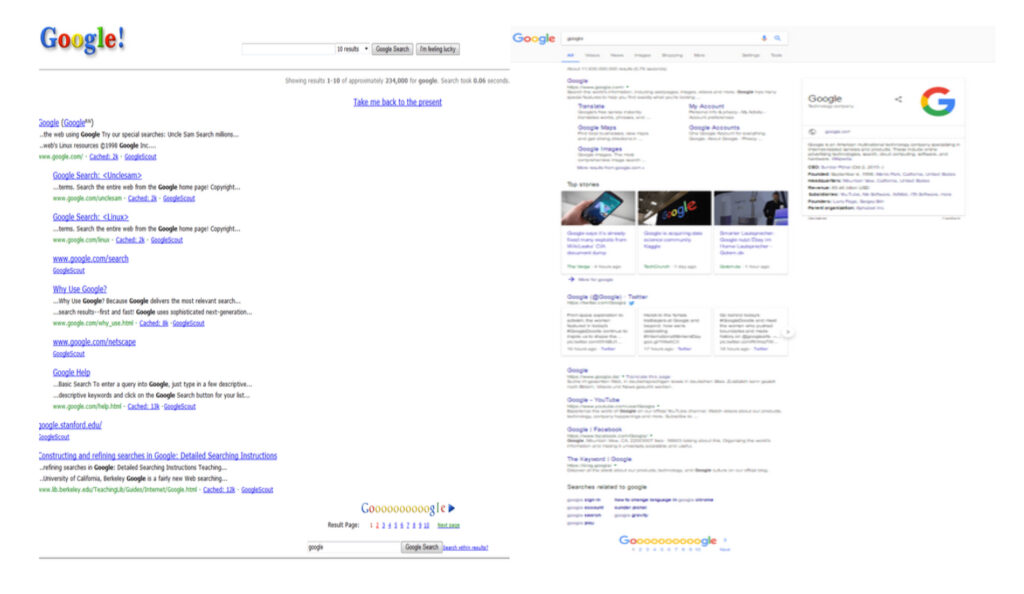

Professor Tim Wu, former White House advisor on antitrust, proposed remedies following Judge Mehta’s decision in the U.S. Google Search case. He identified both Google’s revenue-sharing agreements that exclude competitors and its access to certain “choke points” as a basis for remedies. His proposals included ordering the divestiture of Chrome and the Android operating system, as well as an access remedy covering Google’s browser, data, and A.I. technologies.

It is hard to see, however, how transferring a browser monopoly into others’ hands would facilitate access to and use of it. That could repeat the mistake of the 1984 AT&T divestiture order, which transferred local access monopolies into separate ownership without creating any competitive constraint or pressure on those local businesses to innovate and compete. In a follow-up article, Julia Angwin pointed out that the fundamental problem is that Google’s search results pages, facing no competitive pressure, are now “a pulsing ad cluttered endless scroll,” which masks relevant results. Google’s ad-fuelled profit maximisation leads it to promote what is remunerative over what is more relevant.

There also remains a major issue with any access order: will it be able to withstand future technology changes that Google might use to circumvent its aims? This is a crucial issue when writing an order requiring a monopoly tech company to provide access to XYZ or supply an XYZ interface: the day after the order is written, a technical change (or simply a version update) could make the order technically outdated and pointless.

Any remedy first needs to stop the infringement, prevent its recurrence, and restore competition. So, the core problem now is to restore competition in the search market that Google monopolizes. This means finding a competing consumer-facing search product that is ad-funded, so that “free at the point of use” search can exert competitive pressure on Google’s own free-at-the-point-of-use product. A possible option is canvassed below.

The two-sided nature of the search market means any effective solution needs to create consumer-facing competition with Google Search pages and business-facing competition for Google’s Search Text Advertising offering. A starting point for remedies, then, is prohibiting the mechanisms Google used to restrict competition from rivals. This means prohibiting the revenue-sharing and default-setting deals with Apple and other technology and telecoms companies that have acted as a moat protecting Google’s Search “castle.” However, restoring effective competition going forward also means enabling the use of data inputs and alternative access points (such as the browser) so that search ad prices face competitive pressure.

The proposal below is inspired by the BT Openreach settlement (and the prior BT consent decree). BT proposed an access remedy that applied to the local loop. Non-discriminatory access to BT’s local loop (Openreach) business was supplied to third parties on the same terms as it was supplied to downstream parts of BT. The obligation applied to the BT Group of companies and its internal divisions, and the corporate structure was subject to non-discrimination obligations on both supply and use. This improved upon the AT&T divestiture remedy, which was in operation in the United States at the time. Avoiding the risk of technology change also means taking account of an often-overlooked consent decree agreed among BT/MCI/Concert and the DOJ in 1994. That decree broke new ground because it imposed a non-discriminatory “use” obligation on the recipient of services supplied by the monopoly supplier. A similar non-discriminatory use obligation could apply to Google’s use of inputs, and it would apply over time irrespective of the technical means of supply.

Scale of Google’s data inputs and sunk investment costs

Google now has unrivalled scale in data acquired from billions of users millions of times per day when they interact with Google’s many products. Its ownership of Chrome, the dominant web browser, provides Google with unrivalled browser history data. It also uses other interoperable code (such as that stored in cookies) to check which websites users have visited, and it has an unparalleled understanding of consumers’ interests and purchasing behavior. Per Judge Mehta’s Memorandum Opinion in USA v Google (Search), data from billions of search histories provides it with “uniquely strong signals” of purchase intent, which are combined with data from users’ interactions with all of Google’s many other products (see the trial exhibit of a Google presentation: Google is magical). Its knowledge from all data inputs is combined to provide it with high-quality information for advertising. The Memorandum Opinion recognizes that “more users mean more advertisers and more advertisers mean more revenues,” and “more users on a GSE means more queries, which in turn means more ad auctions and more ad revenue.” These positive feedback loops suggest increasing returns to scale, as well as returns to scope across the range of products offered over the same platform, which uses artificial intelligence as part of its systems. It has built one of the most recognized and valuable brands in the world.

The costs facing any competitor seeking to make an entirely new search engine from scratch are now enormous. This is referenced in evidence as the “Herculean problem.” Reference is made to the many billions of dollars that would be needed by Apple to build a new search engine of its own.

Any restoration of competition will now have to overcome these very considerable advantages and sunk costs while competing with Google as the established, well-known supplier of the best search engine in the world. The point that these costs are “sunk” for Google but not for new entrants is returned to below.

The Memorandum Opinion refers to the uniqueness of Google’s Search and the search access choke points many times. Access to these unique facilities must now be on the cards as a remedy.

Third party access to data inputs, match keys and access points to support effective competition in “free at the point of use search results businesses”

Google uses data inputs to identify the user’s “purchasing intent,” which informs its ads machine. Data inputs are combined from multiple consumer interactions with other digital content, and this has enabled Google to charge high prices for its search text ads. Google’s Search engine consists of at least three key components: (1) an index of media owner content cataloged by a web crawler, (2) a “relevance engine” that matches consumer input to this catalog, and (3) ranking and monetization of the search engine results. At a technical level, the online display advertising system relies on match keys that match demand for ad inventory with a supplier of that inventory. Third parties need access to these data inputs, currently available only to Google, to derive users’ purchasing intent. Competing rivals could then use the input data and match keys to match inventory, supporting display advertising and competing search results page businesses that use Google’s relevance engine. Use of such inputs would help drive down ad prices in competing search businesses.
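To make the role of match keys concrete, here is a deliberately simplified sketch of matching demand for ad inventory with supply through a shared key. It is not a description of Google’s actual systems: the record types, field names, and sample data are all hypothetical. It illustrates the point above, namely that a rival holding identical inventory but lacking the intent data keyed to the same IDs cannot target any of it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IntentSignal:
    """Hypothetical record: purchase intent inferred for a pseudonymous match key."""
    match_key: str
    purchase_intent: str


@dataclass(frozen=True)
class AdSlot:
    """Hypothetical record: an available ad impression tagged with a match key."""
    match_key: str
    publisher: str


def match_demand_to_inventory(signals, slots, advertisers):
    """Pair each ad slot with advertisers whose target category equals the
    purchase intent recorded for the same match key.

    signals: IntentSignal records (the data inputs)
    slots: AdSlot records (the supply of ad inventory)
    advertisers: list of (advertiser_name, target_category) pairs (the demand)
    """
    intent_by_key = {s.match_key: s.purchase_intent for s in signals}
    matches = []
    for slot in slots:
        intent = intent_by_key.get(slot.match_key)
        if intent is None:
            continue  # no intent data for this key, so the slot cannot be targeted
        for name, category in advertisers:
            if category == intent:
                matches.append((slot.publisher, name, intent))
    return matches


if __name__ == "__main__":
    signals = [IntentSignal("k1", "running shoes"), IntentSignal("k2", "flights")]
    slots = [AdSlot("k1", "news-site"), AdSlot("k3", "recipe-blog")]
    advertisers = [("ShoeCo", "running shoes"), ("AirCo", "flights")]

    # A holder of the intent data can target the slot tagged k1.
    print(match_demand_to_inventory(signals, slots, advertisers))
    # A rival with the same inventory but no intent data matches nothing.
    print(match_demand_to_inventory([], slots, advertisers))
```

The first call prints `[('news-site', 'ShoeCo', 'running shoes')]` and the second prints `[]`; the only difference between the two callers is access to the data inputs.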

Access points for search businesses include the Chrome browser. Here, Professor Wu’s suggestion that the browser could be quarantined could be picked up. The browser would also need to be monitored so that it provides a neutral gateway to the web. It is a choke point that can be enhanced with additional functionality: a wallet in the browser, for example, substitutes for decentralized wallets that competing websites could otherwise deploy. Just as the 1956 AT&T consent decree prevented AT&T from competing in areas that were open to competition, Google could be prevented from adding functionality to its gateway that others could provide elsewhere on the web. The browser then loses its position as gateway controller and becomes a neutral window on the web.

Google is owned by Alphabet, so there is an opportunity to impose an obligation on Alphabet not to discriminate between Google and third parties in the supply of its relevance engine, rather than of its Search system as a whole. That would enable competition between search pages and page presentations offered by different businesses, and it would avoid the enormous cost and “Herculean” task of creating a new search engine from scratch. New players might then be tempted to enter that business and resell Alphabet’s relevance engine results combined with their own ads or ads from third parties, which would increase price pressure on search ads.

Currently, 80 percent of the SERP (search engine results page) is composed of advertising of one sort or another. Enabling competition in the provision of search results could avoid the morass of current search pages and encourage both quality and price competition, benefiting both consumers and advertisers. Alternative search businesses could be expected to innovate in the way they provide and present ads; higher proportions of their results pages could consist of relevant results, with fewer ads. If competing businesses had access to Alphabet’s relevance engine and data inputs, they could use them for their own advertising, introducing price pressure on Google’s search text ads. New entrants could be expected to finance their businesses quickly, given that they would be reselling a proven search product.

Availability of distribution deals with Google’s revenue sharing partners

The current agreements with Apple, OEMs, and telecoms carriers operate as exclusive agreements. They contain contractual restrictions in the form of default settings and revenue-sharing payments, which incentivize the parties to promote Google Search Ads. The scale of the payments operates as a disincentive that prevents the parties from offering products that compete with Google in search.

Removing only the contractual default setting is likely to be insufficient to end the anticompetitive effect of the agreements and would do nothing to restore competition. The sharing of revenue from Google’s Search advertising must end if competition from new search advertising players is to be established.

Ending the current revenue-sharing distribution deals raises the question of what an acceptable replacement deal would be. If Google products are to compete on their merits, no restriction at all should prevent distributors from offering competing alternatives. However, Google’s distributors will still need to be paid for distribution, and the volume and scale of payments are so large that current recipients are still likely to sell only Google products, even if the restrictive provisions are removed. They are unlikely to take the risk of backing a competing search product unless some form of competition in search is restored. If access to Alphabet’s relevance engine is mandated as described above, that would also help to restore competition at the distributor level.

A proposed access remedy needs to underpin the restoration of competition

A remedy order applicable to Alphabet could provide access to an independent and quarantined browser (access point) and to the search relevance engine. That alone would not restore competition. Overcoming the considerable barrier to entry facing a new entrant that seeks to build its own relevance engine and attract new users while competing with Google is very hard; it is currently prohibitive, even for Apple.

When considering the issue further, it is important to appreciate that:

• The relevance engine and index are currently both organizationally and technically separate from the ads and ad network organization.

• Search is currently optimized by people working in a search business. There are separate groups of people that work on products and separate organizations for advertising.

• Alphabet’s products (news, maps, images, shopping, etc.) are interwoven among relevant search results when the page is presented to end users. An effective remedy could build on these existing organizational and business boundaries.

If third-party competitors could access the relevance engine and its index on non-discriminatory terms, they might be able to create effective competition between new “Search Engine Results” businesses. Those businesses would gain access to the substantial sunk investments already made in optimizing search for users’ needs and could overcome the substantial value of the “Google” brand. As noted above, that investment is sunk for Google but represents a considerable barrier to entry for others. Since much of that value has been obtained illegally, there would be a case for stripping Alphabet of it. A better solution here might be to allow the brand to be used to support entry: new competitors would be known to be using Google’s world-renowned relevance engine, and that established reputation for quality would help entry. As this is central to restoring competition, compensation for use is then a non-issue.

Moreover, Google currently offers access to its relevance engine to companies (like DuckDuckGo) that resell its results, so it cannot easily argue that the above proposal is unworkable.

How the proposal addresses technology changes over time

The law has been broken through the denial of access to data inputs and choke points, which has deprived rivals of scale. No other player has sufficient scale to replicate Google’s position. Access to the same data that is used in Google Ads would give competitors a starting point from which to create competing search ads. The solution is access to the IDs and data inputs that Alphabet uses to fund its search business. Obligations can be crafted to provide access to data feeds on a non-discriminatory basis, covering whatever Google itself uses.

To be clear, competitors will need two critical data feeds to function: (1) access to the Google relevance engine, which would let competitors offer a highly relevant search product drawn from a proven, established, world-renowned, and high-quality source; and (2) access to the data inputs, advertising IDs, and match key data used in Google search ads to identify purchasing intent that can be matched with available advertising inventory.

As a matter of U.S. law and practicality, a non-discrimination obligation on usage can be contained in an order addressed to Google as the user of a search engine or data source owned by Alphabet. Because it is a usage-based non-discrimination obligation applicable to the user of assets owned by the head company, Alphabet, it is materially different from a requirement to supply. It carries less risk of offending the case law that defers to businesses in deciding whether and with whom they contract; it is instead a requirement not to discriminate between what Google’s search business receives and what third parties’ search businesses receive. If Google’s monetization of search results used no inputs from its relevance engine or data hoard, it would have no obligation to supply. Conveniently, the Alphabet holding company could also be the addressee of the obligation, as was the case with BT Group and its operating corporate entities such as Openreach.

Note that this approach also better addresses technology change over time. The more usual divestiture order and access obligation suffers from technology being defined at the time the order is written. Because such an order must be written as a remedy to a defined problem, if the harm was the bundling of interoperability or a lack of access to XYZ APIs, the order mandates unbundling and a requirement to supply XYZ APIs. If a new API is invented that achieves the same end by different means, or a new technology is introduced, there will have been no case against the defendant for abuse relating to that new API or technology, and no order can easily force the supply of the new API. By contrast, where the addressee of the order is in the same group as the supplier, an order can be crafted in terms of non-discrimination in the use of the monopoly asset owned by the group head company and used by a functionally separate business.
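The difference between the two drafting approaches can be sketched as two compliance checks. This is a toy model under stated assumptions, not a proposed order: the feed names (`xyz_api`, `new_api`) are hypothetical placeholders, and a real compliance regime would involve a monitor, not a set comparison. The enumerated order only checks the APIs it names, so a new internal-only interface slips through; the usage-based check compares against whatever the internal search business receives, so it catches the new interface without the order being rewritten.

```python
"""Hypothetical compliance checks contrasting two ways of drafting a remedy.

Feed names are illustrative placeholders, not real Google or Alphabet interfaces.
"""

# An access order drafted at one point in time enumerates the APIs it covers.
ENUMERATED_APIS = frozenset({"xyz_api"})


def complies_with_enumerated_order(internal_feeds, third_party_feeds):
    """Enumerated access order: third parties must receive each named API.

    internal_feeds is accepted only for symmetry; this kind of order never
    looks at what the internal business receives.
    """
    return ENUMERATED_APIS <= set(third_party_feeds)


def complies_with_nondiscrimination(internal_feeds, third_party_feeds):
    """Usage-based non-discrimination: whatever the internal search business
    receives, third-party search businesses must also receive."""
    return set(internal_feeds) <= set(third_party_feeds)


if __name__ == "__main__":
    # Later, a new interface reaches the same end by different means and is
    # supplied only to the internal search business.
    internal = {"xyz_api", "new_api"}
    third_party = {"xyz_api"}
    print(complies_with_enumerated_order(internal, third_party))   # True
    print(complies_with_nondiscrimination(internal, third_party))  # False
```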

Conclusions

This essay addresses the core problem for effective remedies identified in USA v Google (Search). Any remedy needs to address the scale of Google’s data inputs and sunk investments. This is remedied by providing third-party access to data inputs and access points to support effective competition in “free at the point of use search results” businesses, which would also create competing ads businesses that put pressure on ad prices. The current distribution and revenue-sharing agreements need to be prohibited. The proposed access remedy enables competition between rival search engine results businesses, imposing market discipline on the promotion and presentation of search results. The proposal addresses technology change over time by drawing on lessons learned from divestiture in telecommunications and by ensuring that non-discrimination in the usage of key inputs is the focus of the remedy.

Additionally, allowing Alphabet to continue to own its browser (even if quarantined) and provide access to search access points means that capital funding will continue to be in the interests of the Alphabet group. Divestiture would otherwise place monopoly assets in others’ hands with incentives to raise price and degrade quality for all those seeking to use them. Funding of divested assets that are currently cost centres in a vertically integrated business would otherwise also be a major issue to overcome. Here, the proposed non-discrimination remedy bites in a different way – so that technology change is not a problem with this type of remedy.

The approach described here would need to be coupled with transparency obligations so that third parties have visibility of the data inputs that the Google Search Co receives and can make comparisons. Agreements can be entered into between different corporate entities within Alphabet, whether partially in separate ownership or otherwise, to enable more effective oversight across both a corporate and a technical boundary. If these agreements are drafted carefully, addressing technology, financial, and commercial terms, the scope for technology change to circumvent the remedy can be managed. In effect, the aim would be to make the remedy future-proof.

On the fourteenth of August in the year 2024, The Sling’s humble scribe came into possession of a facsimile of a transcript meticulously typed up by a certain Court Reporter—by way of an avowed acquaintance of the loyal manicurist of said reporter—in the heart of that certain city renowned for its association with that certain Saint, the inimitable bird-bather and wolf-tamer called Francis of Assisi. This impeccable chain of custody establishes beyond reproach the provenance of the narrative contained within the transcript, which itself proclaims an association with that certain hearing in a Court of Judicature in turn associated with the manifold possibilities of crafting a remedy equitably suited to those various monopolistic machinations pertaining to certain shops bearing applications on assorted devices in possession of a telephonic nature.

In the following rendition, all needless matters have been excised, and all excerpts chosen are unerringly and exactly contemporary. So able was the Court Reporter’s work that, in truth, very little was left to the scribe’s editorial discretion but mere clippery, with a few modest extra touches. Indeed, the task could have been delegated to an electronic golem but for the regrettable necessity of forestalling that certain kind of liability associated with counseling readers to engage in nonstandard culinary practices.

Google’s closing argument went… a little something… like this…

Google’s lead attorney, Glenn Pomerantz (henceforth “Google”): Judges shouldn’t be central planners!

Judge James Donato: I totally agree.

Google: Judges shouldn’t micromanage markets!

Judge: I totally agree.

Google: If you order Google to list other app stores on Play, with some interoperability features, you’re a scary unAmerican Soviet central planner.

Judge: Nope.

Google: Yes, you are!

Judge: Not a Communist. Not even a little bit.

Google: Yes, you are!

Judge: Am decidedly not.

Google: Are decidedly too!

Judge: Anything else you’d like to add?

Google: This order would make you a micromanager of markets.

Judge: I’m not telling anyone which APIs to use. There will be a technical monitor.

Google: Then the technical monitor is an unAmerican micromanager!

Judge: Is not.

Google: Is too!

Judge: Let’s move on.

Google: Yes, my next slide says we must march through the case law. The case law says… drumroll! …that central planning is bad.

Judge: I totally agree! That’s why I’m not doing it.

Google: Yes, you are.

Judge: No, I’m not. My order will be three pages long. Focused on general principles.

Google: But you’ll have to rule on disputes the technical monitor can’t resolve—super detailed technical things, possibly beyond human understanding.

Judge: Still not a Communist.

Google: Well, let’s not forget the *life-changing magic* of Google’s *amazing* origin story. Google was pretty much the maverick heroic Prometheus of the Information Superhighway.

Judge: I totally agree!

Google: You do?

Judge: Yes. Google had superior innovation. Success is not illegal. What’s illegal is then building a moat through anticompetitive practices.

Google: You want to impose these mean remedies because you hate Google.

Judge: Not at all. And this isn’t about me; I’m charged with the duty to impose a remedy based on a jury verdict. I have to follow through on the jury’s conclusion that Google illegally maintained a monopoly over app stores.

Google: That’s central planning!

Judge: Still not a Communist.

Google: I never said you were a Communist.

Judge: Is “central planning” just your verbal tic then? Like um or uh?

Google: Maybe. I’ll have it checked out.

Judge: Nondiscrimination principles and a ban on anticompetitive contract terms are time-tested, all-American, non-Communist remedies.

Google: You know how some people are super-bummed they were born after all the great bands?

Judge: What, are you saying you miss Jimi Hendrix?

Google: That’s more Apple’s thing. What I think about, late at night, is how tragic it is that Joseph McCarthy died so young.

Judge: Huh, Wikipedia says 48. That *is* kind of young.

Google: Thank you for taking judicial notice of that. By the way, have you ever read Jorge Luis Borges?

Judge: Do I look like someone who reads Borges?

Google: Your Honor, Borges had this story about an empire where “the art of cartography was taken to such a peak of perfection” that its experts “drew a map of the empire equal in format to the empire itself, coinciding with it point by point.”

Judge: Do you have a point?

Google: The map was the same size as the empire itself! Isn’t that amazing? We think remedies need to be just like that. Every part of a remedy needs to map onto its exact causal twin in the anticompetitive conduct.

Judge: That’s not the legal standard for prying open markets to competition. If I don’t grant Epic’s request, what should I do instead?

Google: Instead of Soviet-style success-whipping, the court should erect a statue to my memory. Or at the very least, overrule the jury.

Judge: That’s up to the appeals court now. We’re here to address remedies. You tell me, what’s an appropriate remedy for illegal maintenance of monopoly?

Google: Nothing.

Judge: Not an option.

Google: Okay, look, we’re open to reasonable compromise here: how about a remedy that sounds like something… but is actually nothing?

Judge: What would the point of winning an antitrust case be then? Why would anyone put in all that time and money and effort to bring a case?

Google: Exposure.

Judge: Your competitors aren’t millennial influencers hoping to pay rent with “likes.” They need ways to earn actual legal tender through vigorous competition.

Google: Your Honor, respectfully, legal tender is central planning.

Judge: I guarantee you my order will not touch monetary policy with a ten-foot pole.

Google: Very well but as you can see it is important to start from first principles when debating remedies. Before we do anything rash that could ruin smartphones, crash the entire internet, and send the nuclear triad on a one-way trip to Soviet Communist Russia, we need to take a step back and ask ourselves “What even *is* an app store?”

Judge: Hell no we don’t. We’ve been through *four years* of litigation and a full jury trial. This is no time to smoke up and get metaphysical…

Google: Out, out damn central planner!

Judge: Was that outburst medical or intentional?

Google: Both.

Court Reporter: Can we wrap this up? I’m running late for my manicure.

Judge: I’ve heard enough. I’m mostly going to rule against Google. But there was one part of your argument that I *did* find extremely compelling, and I will rule for Google on that point.

Google: Really?

Judge: Yes, and you put it best on your own website, so I’ll let that record speak for itself: https://tinyurl.com/neu4weu2

Ed. note: Six days later, acting upon advice from a Google search snippet, Soviet troops invaded the courtroom, seeking political asylum.

Laurel Kilgour wears multiple hats as a law and policy wrangler—but, and you probably know where this is going—not nearly as many hats as Reid Hoffman’s split personalities. The views expressed herein do not necessarily represent the views of the author’s employers or clients, past or present. This is not legal advice about any particular legal situation. Void where prohibited.

In late May, the New York Times ran a story by Eric Lipton titled “Elon Musk Dominates Space Launch. Rivals Are Calling Foul.” In response, the antitrust community largely shrugged its shoulders. I went back and gave it a read, along with related stories in the Wall Street Journal (“Elon Musk’s SpaceX Now Has a ‘De Facto’ Monopoly on Rocket Launches”), the Washington Post (“SpaceX could finally face competition. It may be too late.”), and CNBC (“SpaceX’s near monopoly on rocket launches is a ‘huge concern,’ Lazard banker warns”). Having reviewed the theories of competitive harm and the publicly available evidence, I conclude that there is a monopolization case worth pursuing here.

Lipton’s piece in the Times contained two noteworthy allegations (emphasis added):

Jim Cantrell worked with Mr. Musk at the founding of SpaceX in 2002. When he started to build his own launch company, Phantom Space, two potential customers told his sales team they could not sign deals because SpaceX inserts provisions in its contracts to discourage customers from using rivals.

Peter Beck, an aerospace engineer from New Zealand, met in 2019 with Mr. Musk to talk about Mr. Beck’s own launch company, called Rocket Lab. Several months later, SpaceX moved to start carrying small payloads at a discounted price that Mr. Beck and other industry executives said was intended to undercut their chances of success.

The first allegation refers to what economists consider an exclusionary contract: You can buy from me only if you commit to not buying from my rival. Other exclusionary provisions include demanding that buyers fulfill a large portion of their needs with the seller or that buyers give the seller a right to match. The second allegation sounds like predation, which requires pricing below a firm’s incremental costs and a likely chance of recoupment. Both are well-recognized restraints of trade that can generate anticompetitive effects under certain conditions, the first of which is when the restraint is employed by a dominant firm.

SpaceX is dominant in space transportation

Firms that are not dominant in a market can engage in exclusionary tactics without fear of exposing themselves to antitrust scrutiny. It is the combination of market power plus an exclusionary restraint that generates anticompetitive effects. Obtaining market shares for a privately held company like SpaceX is admittedly difficult. But the New York Times story tells us that in 2023, “SpaceX secured $3.1 billion in federal prime contracts, according to the data, nearly as much as the combined amount the federal government committed for space transportation and related services from its nine competitors, from giants like Boeing and Northrop Grumman to startups like Blue Origin.” This statistic implies that, at least as a share of government spending on space transportation, SpaceX commands nearly a 50 percent share. The article also tells us that “SpaceX’s 96 successful orbital launches during 2023 contrast with seven launches to orbit from the U.S. in total last year by all of SpaceX’s competitors,” indicating a share of over 93 percent when measured in terms of U.S. launches. In the same story, Musk himself reckons that as of 2023, SpaceX delivered 80 percent of the world’s cargo to space. According to BryceTech, in the fourth quarter of 2023, SpaceX lifted nearly 90 percent of all pounds sent into orbit. Any share in this range (50 to 93 percent) would be consistent with dominance, particularly when combined with evidence of entry barriers.
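The two shares derived above follow from simple arithmetic, sketched here. The launch counts and the $3.1 billion figure come from the Times story; the rivals’ combined contract total is not reported, so the sketch assumes, as a simplification, that it equals SpaceX’s. Because SpaceX’s total was only “nearly as much as” its rivals’, that assumption makes 50 percent an upper bound on the contract share.

```python
def share(firm, rivals):
    """Firm's share of a total consisting of the firm plus its rivals."""
    return firm / (firm + rivals)


# 2023 orbital launches, per the New York Times figures quoted above.
spacex_launches = 96
rival_us_launches = 7
launch_share = share(spacex_launches, rival_us_launches)

# Federal prime contracts in billions of dollars. The rivals' total is an
# assumption: set equal to SpaceX's, which yields an upper bound of 50%.
spacex_contracts = 3.1
rivals_contracts_assumed = 3.1
contract_share_upper_bound = share(spacex_contracts, rivals_contracts_assumed)

print(f"Launch share: {launch_share:.1%}")                            # 93.2%
print(f"Contract share (upper bound): {contract_share_upper_bound:.1%}")  # 50.0%
```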

SpaceX’s market share is protected by entry barriers

By the time SpaceX launched its 63rd mission of 2023, ULA, the next largest U.S. rocket competitor, had completed just two launches. Each rocket launch leads to new data, the same way that each drive by a Tesla owner gave Tesla new information over its electric vehicle rivals. (A similar incumbency advantage owing to learning economies prompted policymakers to endorse subsidizing charging stations and even forcing Tesla to open its stations to EV rivals.) The Washington Post story has a line from the CEO of Firefly Aerospace that supports this effect: “You could see a scenario where one provider has such a lead … that it is literally impossible to catch up on the order where there will be true competition.” Moreover, SpaceX has “deep ties to NASA and the Pentagon, which have awarded it billions of dollars in contracts and elevated it to prime contractor status.”

There are myriad other natural barriers to entry:

- High fixed costs: Lars Hoffman, then senior vice president at Rocket Lab, estimated in 2020 that it cost $100 million to get a rocket to its first launch;

- Long development periods: Development periods of at least three to five years for rockets are common. Blue Origin’s first orbital launch is more than three years behind schedule and now planned for later this year; and

- Strategic launch schedules: According to the Wall Street Journal, “SpaceX’s grip on the launch business means many government agencies and satellite operators must tether their ambitions to the company’s timetables and capabilities.” Per the Washington Post, “SpaceX’s perch atop the industry has allowed it to dictate timelines and prices for satellite launches that favor its launch cadence and schedule, industry officials said.”

In an attempt at journalistic balance, Lipton suggests that competitive entry is picking up despite these natural impediments:

Jeff Bezos’ Blue Origin is close to its first launch for its New Glenn rocket. RocketLab is building what it calls Neutron, and Relativity Space is working on its TerranR, among other new entrants. After years of delays, Boeing is soon expected to start launching NASA astronauts into space on its new Starliner spacecraft.

Lipton ultimately concludes, however, that the ability of the United States to reach orbit in the near term “remains largely dependent on Mr. Musk and his Falcon 9 rocket.” The aforementioned high fixed costs, long development periods, and strategic launch schedules can counter any evidence of initial entry. Even if these natural barriers could be overcome, entrants would still have to hurdle the artificial barriers erected by SpaceX’s two forms of exclusionary conduct.

SpaceX’s ride-sharing program might be predatory

Recall that Mr. Beck of Rocket Lab alleged that SpaceX started carrying small payloads at a discounted price that Rocket Lab could not match. Here’s more on the predation allegation from the New York Times:

[Beck] and other industry executives said they were convinced that SpaceX had set the price for its Transporter service — where small satellite companies can book slots on a Falcon 9 launch — with the explicit goal of undermining the financial plans of emerging competitors. Transporter’s low price — initially $5,000 per kilogram — was below what some industry executives calculated was SpaceX’s basic cost. They concluded that SpaceX could only offer such a low price by subsiding those flights with some of its government contracting revenue.

Beck also asserted that SpaceX was selling flights on its new Bandwagon service, which offers satellite makers launches to orbits that provide them better coverage over key sections of the world, “far below its own costs to undermine its competition.”

To know whether such pricing is in fact predatory, one must estimate the incremental (that is, avoidable) cost for SpaceX’s ride-sharing missions. Adding one payload to a rocket likely imposes no incremental costs for SpaceX. Thus, the test should be performed on a per launch basis.

The best estimate of SpaceX’s marginal costs per launch comes from Musk himself at $15 million under a “best-case” scenario. But that number excludes other avoidable costs, including “the costs to refurbish the first stage rocket booster, and the cost to recover and refurbish fairings.” Musk also claims that, with regard to manufacturing costs, SpaceX incurs “$10 million to manufacture a new upper stage [rocket] and that this stage represents about 20 percent of the cost of developing the rocket.” If SpaceX replaces this upper-stage rocket every mission, then the incremental costs are $25 million.

Turning to the revenue side of the equation, SpaceX’s average incremental revenue per launch has declined to roughly $22.5 million (equal to $300k per payload times the average of 75 payloads per launch). This would not cover the incremental costs estimated above, and to the extent these numbers are accurate, would be predatory. Of course, these estimates are based on publicly available information. An antitrust agency pursuing an investigation would be able to obtain more precise estimates.
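The price-cost comparison above can be written out as a short calculation. All inputs are the public estimates quoted in the text; the only modeling choice is the flag for whether the upper stage is replaced each mission, which the text treats as a condition. The sketch covers only the below-cost prong of a predation test, not recoupment, and since Musk’s “best-case” figure excludes refurbishment costs, the cost side is if anything understated.

```python
# All figures in US dollars, taken from the public estimates quoted above.
BEST_CASE_LAUNCH_COST = 15_000_000  # Musk's "best-case" marginal cost per launch
NEW_UPPER_STAGE_COST = 10_000_000   # added if a new upper stage is built every mission
PRICE_PER_PAYLOAD = 300_000         # average ride-share revenue per payload
PAYLOADS_PER_LAUNCH = 75            # average payloads per ride-share launch


def incremental_cost_per_launch(replace_upper_stage: bool = True) -> int:
    """Avoidable cost of one launch, optionally including a new upper stage."""
    cost = BEST_CASE_LAUNCH_COST
    if replace_upper_stage:
        cost += NEW_UPPER_STAGE_COST
    return cost


def incremental_revenue_per_launch() -> int:
    """Ride-share revenue attributable to one launch."""
    return PRICE_PER_PAYLOAD * PAYLOADS_PER_LAUNCH


def below_cost(revenue: int, cost: int) -> bool:
    """Price-cost prong only: does revenue fall short of incremental cost?"""
    return revenue < cost


cost = incremental_cost_per_launch()
revenue = incremental_revenue_per_launch()
print(f"cost ${cost:,}, revenue ${revenue:,}, shortfall ${cost - revenue:,}")
print("Below incremental cost:", below_cost(revenue, cost))
```

This prints a cost of $25,000,000 against revenue of $22,500,000, a $2,500,000 shortfall. Calling `incremental_cost_per_launch(False)` drops the cost to $15 million, above which the $22.5 million revenue sits, which is why the conclusion depends on the upper-stage assumption and why agency access to precise cost data matters.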

I also find the evidence on the likelihood of recoupment to be highly persuasive. The Washington Post story offers this line on ride-sharing: “One example of how SpaceX made it tough on competitors was its move a few years ago to launch smaller satellites in bunches at very low prices in a ‘rideshare program’ that was seen in the industry as a tactic to target smaller launch companies such as Rocket Lab by taking away customers.” The aforementioned evidence of high fixed costs and long development periods also makes recoupment more likely. Finally, the rocket industry is subject to considerable scale economies, so any practice that denies rivals the ability to achieve scale could be seen as exclusionary and consistent with the classic raising-rivals’-cost framework.

SpaceX’s contracts with customers seem to be exclusionary

The second potentially anticompetitive restraint employed by SpaceX is the use of exclusionary provisions in contracts with its customers, who consist largely of government agencies and satellite companies (many of which compete against Starlink). Here is a little more detail from the New York Times on SpaceX’s contracting:

Mr. Cantrell, whose company Phantom Space has received funding from NASA to help build its new launch vehicle, said his sales team had been told by Sidus Space and a second company that SpaceX had demanded contract provisions intended to limit their ability to hire other launch providers.

Carol Craig, the chief executive of Sidus Space, confirmed in an interview that SpaceX had a “right of first refusal” provision in a deal she had signed for five launches, allowing SpaceX to counter any offers from its competitors.

A right of first refusal, sometimes called a right to match, can foreclose competition to the extent it discourages rivals from making competitive offers to the customer. Why would a rival launch provider bother formulating a costly bid if the incumbent (SpaceX) can end the competition by simply matching the rival’s offer? Economists recognize that such provisions can generate anticompetitive effects when employed by a dominant firm and when the associated “foreclosure share” is economically significant (typically over 30 percent).

The foreclosure share, as the name suggests, is the share of the market that is foreclosed by an exclusionary contract. Consider a market in which a dominant firm supplies 80 percent of the market and half of its customers buy pursuant to a contract that contains the exclusionary provision. In that case, the foreclosure share would be 40 percent (equal to the product of 80 percent market share and 50 percent of customers with the provision). To the extent that most (or all) of SpaceX’s customers have such a provision in their contracts, the foreclosure share should easily clear the 30 percent threshold.
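The foreclosure-share arithmetic can be expressed in a few lines. The 80 percent and 50 percent inputs reproduce the worked example above, and the 30 percent threshold is the rough screen cited in the text. As an added illustration, the sketch inverts the formula to ask what fraction of a dominant firm’s customers must carry the provision to reach the threshold, using the 50 and 93 percent bounds on SpaceX’s share discussed earlier; those are the article’s estimates, not measured foreclosure data.

```python
THRESHOLD = 0.30  # rough screen for economic significance cited above


def foreclosure_share(market_share, fraction_of_customers_bound):
    """Share of the whole market foreclosed: the dominant firm's share times
    the fraction of its customers whose contracts carry the provision."""
    return market_share * fraction_of_customers_bound


def min_fraction_bound(market_share, threshold=THRESHOLD):
    """Fraction of the firm's customers that must be bound for the foreclosure
    share to reach the threshold (capped at 1.0, i.e. all customers)."""
    return min(1.0, threshold / market_share)


if __name__ == "__main__":
    example = foreclosure_share(0.80, 0.50)
    print(f"Worked example: {example:.0%} foreclosed, above threshold: {example > THRESHOLD}")
    # Bounds on SpaceX's share taken from the estimates discussed earlier.
    for share in (0.50, 0.93):
        print(f"share {share:.0%}: need {min_fraction_bound(share):.0%} of customers bound")
```

The worked example prints 40 percent foreclosed. At a 50 percent market share, 60 percent of customers would need the provision; at 93 percent, roughly 32 percent would suffice, which is why widespread use of rights of first refusal would clear the screen easily.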

SpaceX could be favoring its own satellite broadband company

Predation and exclusionary contracting fit squarely within antitrust’s orbit (pun intended). Self-preferencing, on the other hand, is harder to police. A classic example is Amazon favoring its own merchandise over that of rival merchants. SpaceX might be distorting competition in satellite broadband, a service vertically related to rocket launches. The fact that satellite broadband rivals like OneWeb, Kacific, and Echostar rely on SpaceX to launch their satellites raises natural concerns about SpaceX favoring its affiliated satellite broadband company, Starlink. Per the Wall Street Journal story: “‘It’s of course a very uncomfortable situation, where you have a supplier that wanted to go down the value chain and start competing with its own customers,’ said Christian Patouraux, chief executive at Kacific, a satellite internet company focused on Asia and the Pacific region. SpaceX launched a satellite for Kacific in 2019.”

Musk insists that SpaceX charges unaffiliated satellite broadband rivals the same as others, but query what SpaceX is charging Starlink (if anything) for launches. Ownership of Starlink also creates a conflict for SpaceX when it comes to scheduling launches for customers: “If Starship doesn’t ramp up as expected, there will likely be a shortage unless SpaceX allocates more of its Falcon fleet for customers instead of Starlink.”

Will the agencies launch a case?

SpaceX’s exclusionary contracts with customers have all the markings of an anticompetitive restraint. While predation cases are rare, SpaceX’s pricing seems oddly low relative to its incremental costs, and the chance of recoupment is high. If an antitrust agency were considering filing a Section 2 complaint against SpaceX, it should push the boundaries by challenging SpaceX’s self-preferencing as well.

Rocket launches are considered a must-have input for transporting satellites, spacecraft, and astronauts into orbit. The launch industry is important to U.S. national security, and the defense agencies should aim to avoid making the government overly dependent on a monopolist, especially a predatory one. For the foregoing reasons, the antitrust case against SpaceX might soon have liftoff.

The Justice Department’s pending antitrust case against Google, in which the search giant is accused of illegally monopolizing the market for online search and related advertising, revealed the nature and extent of a revenue sharing agreement (“RSA”) between Google and Apple. Pursuant to the RSA, Apple gets 36 percent of advertising revenue from Google searches by Apple users—a figure that reached $20 billion in 2022. The RSA has not been investigated in the EU. This essay briefly recaps the EU law on remedies and explains why choice screens, the EU’s preferred approach, are the wrong remedy focused on the wrong problem. Restoring effective competition in search and related advertising requires (1) the dissolution of the RSA, (2) the fostering of suppressed publishers and independent advertisers, and (3) the use of an access remedy for competing search-engine-results providers.
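As a rough cross-check on the two RSA figures, the size of the revenue pool they imply can be backed out with one division. This is a hypothetical back-of-the-envelope sketch: it assumes the $20 billion payment is exactly 36 percent of a single revenue base, which may not hold if the share applies only to certain search access points or if the payment has other components.

```python
# Hypothetical back-of-the-envelope calculation using only the two figures in the text.
APPLE_SHARE = 0.36          # Apple's cut of Google search ad revenue from Apple users
APPLE_PAYMENT_2022 = 20.0   # US$ billions paid to Apple in 2022


def implied_revenue_base(payment: float, share: float) -> float:
    """Revenue base R such that share * R == payment (assumes a single flat share)."""
    return payment / share


base = implied_revenue_base(APPLE_PAYMENT_2022, APPLE_SHARE)
print(f"Implied Google search ad revenue from Apple users: ${base:.1f} billion")
```

On that assumption, Google's search ad revenue from Apple users would have been roughly $55 to 56 billion in 2022.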

EU Law on Remedies

EU law requires remedies to “bring infringements and their effects to an end.” In Commercial Solvents, the Commission’s power was held to “include an order to do certain acts or provide certain advantages which have been wrongfully withheld.”

The Commission team that handled the Microsoft case noted that the risk of merely prohibiting the infringement was that “[i]n many cases, especially in network industries, the infringer could continue to reap the benefits of a past violation to the detriment of consumers. This is what remedies are intended to avoid.” An effective remedy restores the competitive position that existed before the harm occurred, which requires three elements. First, the abusive conduct must be prohibited. Second, its harmful consequences must be eliminated. For example, in Lithuanian Railways, the railway track that had been taken away had to be restored, re-establishing the pre-conduct competitive position. Third, the remedy must prevent repetition of the same conduct or of conduct having an “equivalent effect.” The two main remedies are divestiture and prohibition orders.

The RSA Is Both a Horizontal and a Vertical Arrangement

In the 2017 Google Search (Shopping) case, Google was found to have abused its dominant position in search. In the DOJ’s pending search case, Google is likewise accused of monopolizing the market for search. Beyond exposing the contours of the RSA, that case revealed broader coordination between Google and Apple. For example, discovery showed that the two companies hold monthly CEO-to-CEO meetings where the “vision is that we work as if we are one company.” Thus, the RSA serves as much more than a “default” setting: it is effectively an agreement not to compete.

Under the RSA, Apple gets a substantial cut of the revenue from searches by Apple users. Apple is paid to promote Google Search, with the payment funded by income from selling ads aimed at Apple’s wealthy user base. Apple users have higher disposable income than Android users, which makes them highly attractive to advertisers and sellers. Apple users are thought to account for 50 percent of ad spend but only 20 percent of all mobile users.
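The 50/20 split implies how much more valuable an Apple user is to advertisers than other users. The sketch below assumes, as a simplification, that the remaining 50 percent of ad spend is spread over the remaining 80 percent of mobile users; the function name is hypothetical.

```python
from fractions import Fraction


def spend_per_user_index(spend_share: Fraction, user_share: Fraction) -> Fraction:
    """Ad spend per user for a group, relative to the average user (1 = average)."""
    return spend_share / user_share


APPLE_SPEND = Fraction(50, 100)  # share of ad spend attributed to Apple users (from the text)
APPLE_USERS = Fraction(20, 100)  # Apple users' share of all mobile users (from the text)

apple = spend_per_user_index(APPLE_SPEND, APPLE_USERS)
# Assumption: everyone else accounts for the remaining spend and the remaining users.
others = spend_per_user_index(1 - APPLE_SPEND, 1 - APPLE_USERS)
print(apple, others, apple / others)  # 5/2 5/8 4
```

On those figures, an Apple user attracts 2.5 times the ad spend of the average mobile user and four times that of a non-Apple user.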

Compared to Apple’s other revenue sources, the payments made to Apple under the RSA are significant. They generate $20 billion in almost pure profit, which accounts for 15 to 20 percent of Apple’s net income. A payment this large, made under these circumstances, creates several incentives for Apple to cement Google’s dominance in search:

- Apple is incentivized to promote Google Search. This is a form of product placement: Apple is paid to promote Google’s search bar and display it prominently as the default on its products. Because positioning and display can itself be a form of abuse, this treatment gives Google a discriminatory advantage.

- Apple is incentivized to promote Google’s sales of search ads. To increase its own income, Apple has an incentive to ensure that Google Search ads are more successful than rival online ads in attracting advertisers. Because advertisers’ main concern is the return on their advertising spend, that means Google’s Search ads must generate a higher return on advertising investment than ads sold by rival online publishers.

- Apple is incentivized to introduce ad blockers. This is one of a series of interlocking steps in a staircase of abuses that block any player (other than Google) from using data derived from Apple users. Blocking the use of Apple user data by others increases the value of Google’s Search ads and Apple’s income from Apple’s high-end customers.

- Apple is incentivized to block third-party cookies and the advertising ID. This change was made in its Intelligent Tracking Prevention browser update in 2017 and in its App Tracking Transparency pop-up prompt update in 2020. Each step further limits the data available to competitors and drives ad revenue to Google search ads.

- Apple has a disincentive to build a competing search engine or allow other browsers on its devices to link to competing search engines or the Open Web. This is because the Open Web acts as a channel for advertising in competition with Google.

- Apple has a disincentive to invest in its browser engine (WebKit). Such investment would allow users of the Open Web to see the latest videos and engaging ad formats on websites. Apple sets the baseline for the web and underinvests in Safari to that end, preventing rival browser makers such as Mozilla from installing their full Firefox browser on Apple devices.

The RSA also gives Google an incentive to support Apple’s dominance in high-end or “performance” smartphones, and to limit the features, functions, and prices of Android smartphones that compete with Apple. In its Android Decision, the EU Commission found significant price differences between Google Android and iOS devices, while Google Search has been the single largest source of traffic from iPhone users for over a decade.

Indeed, the Department of Justice pleadings in USA v. Apple show how Apple has sought to monopolize the market for performance smartphones through legal restrictions on app stores and by limiting technical interoperability between Apple’s system and others. The complaint lists Apple’s restrictions on messaging apps, smartwatches, and payment systems. However, it overlooks Apple’s restrictions on app stores’ use of Apple users’ data and the way Apple sets the baseline for interoperating with the Open Web.

Apple is often thought of as a devices business. But the size of its RSA with Google means that Apple’s business depends, in part, on income from advertising that Google sells using Apple’s user data. In reality, Apple is a data-harvesting business that has delegated the execution to Google’s ads system. Meanwhile, its own ads business is projected to grow to $13.7 billion by 2027. The RSA therefore deserves very close scrutiny in USA v. Apple, as it is an agreement between two companies operating in the same industry.

The Failures of Choice Screens

The abuse in the EU Google Search (Shopping) case consisted of Google’s “positioning and display” of its own products over those of rivals on the results pages. Google’s underlying system is optimized to rank results by their relevance to the user’s query, using an algorithm based on PageRank. It follows that promoting its own products over more relevant rivals requires deliberate effort. The Google Search Decision describes how Google applied a relevance algorithm to determine ranking on the search engine results pages (“SERPs”) but did not apply that algorithm to its own products. As the figure below shows, Google’s SERP has over time filled up with its own products and ads.

To remedy the abuse, the Decision compelled Google to adopt a “Choice Screen.” Yet a choice screen is not an obvious remedy for competitors that had been suppressed, out of sight and out of mind, for many years. The choice screen has a history in EU Commission decisions.

In 2009, the EU Commission identified as an abuse Microsoft’s tying of its web browser to its Windows operating system. Other browsers were not shown to end users as alternatives. This basic lack of visibility was the problem facing end users, and a choice screen was superficially attractive as a remedy, but it was not tested for efficacy. As Megan Grey observed in Tech Policy Press, “First, the Microsoft choice screen probably was irrelevant, given that no one noticed it was defunct for 14 months due to a software bug (Feb. 2011 through July 2012).” The Microsoft case is thus a very questionable precedent.

In its Google Android case, the European Commission found Google acted anticompetitively by tying Google Search and Google Chrome to other services and devices and required a choice screen presenting different options for browsers. It too has been shown to be ineffective. A CMA Report (2020) also identified failures in design choices and recognized that display and brand recognition are key factors to test for choice screen effectiveness.

Giving consumers a choice ought to be one of the most effective ways to remedy a reduction of choice. But a choice screen gives users no choice over how products are presented and displayed in SERPs. Presentation depends on user interactions with pages, and Google’s knowledge of your search history, as well as your interactions with its products and pages, lets it present its own pages in an attractive format. Google eventually changed the Choice Screen to reflect users’ top five choices in each Member State. However, none of these changes addressed the suppression of rival brands or of competition, nor did they rectify the effects of presentation and display on the variety and diversity of supply. Meanwhile, Google’s brand was enhanced by billions of users’ interactions with its products.

Moreover, choice screens have not prevented rival publishers, providers and content creators from being excluded from users’ view by a combination of Apple’s and Google’s actions. This has gone on for decades. Alternative channels for advertising by rival publishers are being squeezed out.

A Better Way Forward

As explained above, Apple helps Google target Apple users with ads and products in return for 36 percent of the ad revenue generated. Prohibiting the RSA would remove the parties’ incentives to reinforce each other’s market positions. Without its share of Google search ad revenue, Apple might find reasons to build its own search engine, or to invest in its browser in ways that would let people shop using the Open Web’s ad-funded rivals. Apple might even compete with Google in advertising.

Next, courts should impose (and monitor) a mandatory access regime. Applied here, Google could be required to operate within its monopoly lane and run its relevance engine under public interest duties in “quarantine” on non-discriminatory terms. This proposal has been advanced by former White House advisor Tim Wu:

I guess the phrase I might use is quarantine, is you want to quarantine businesses, I guess, from others. And it’s less of a traditional antitrust kind of remedy, although it, obviously, in the ‘56 consent decree, which was out of an antitrust suit against AT&T, it can be a remedy. And the basic idea of it is, it’s explicitly distributional in its ideas. It wants more players in the ecosystem, in the economy. It’s almost like an ecosystem promoting a device, which is you say, okay, you know, you are the unquestioned master of this particular area of commerce. Maybe we’re talking about Amazon and it’s online shopping and other forms of e-commerce, or Google and search.